In a recent incident, YouTube became the stage for a series of videos featuring the prominent American businessman Michael J. Saylor, renowned founder of the software company MicroStrategy. In what seemed to be an interview on a popular podcast, Saylor made a tempting promise to viewers: the chance to “double their money instantly” in a remarkably simple way. All they had to do was scan a QR code displayed in the video and make a Bitcoin deposit, with the assurance of receiving double the amount in return.

Quite strange, isn’t it? In reality, this seemingly lucrative offer turned out to be nothing more than a sophisticated crypto scam, orchestrated with the help of AI. Although the situation might have seemed suspect on closer inspection, it had the potential to deceive many, especially since Saylor’s word carries significant weight among cryptocurrency investors, given his reputation as one of the most fervent advocates for Bitcoin.

Unsurprisingly, the exploitation of the MicroStrategy founder’s likeness, along with that of other popular personalities, has grown rapidly in recent times, prompting him and other influential figures to sound the alarm about AI-generated videos.

In this article, we will explain how some of these deepfake crypto scams work, exploring why they are so dangerous and how deepfake detection could play a crucial role in effectively fighting them.


Crypto scams on the rise

The deepfake incident involving Saylor is just one example of the thousands of cryptocurrency scam schemes circulating on the web. With the rapid ascent of AI and deepfakes, the threat is poised to grow, posing new challenges for social media platforms, ordinary citizens, and law enforcement.

The numbers speak for themselves: according to one of the latest reports published by the US Federal Trade Commission (FTC), since 2021 almost 46,000 people in the USA alone have reported falling victim to crypto scams, resulting in total losses of $1 billion, with a median loss of $2,600 per person. Compared with the 2018 data, the magnitude of the damage is roughly sixty times higher. Since not all victims of crypto scams report their losses, experts believe that the actual figures are far higher.
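A quick check of these figures, taken at face value, shows how uneven the losses are. Dividing the total by the number of reports gives a mean far above $2,600, which suggests that the $2,600 figure describes a typical (median) victim while a small number of very large losses pull the mean up. The sketch below uses only the numbers quoted above; the variable names are illustrative, and treating $2,600 as a median is an assumption.

```python
# Figures quoted in the paragraph above (US only, since 2021).
reported_victims = 46_000
total_losses_usd = 1_000_000_000

# Assumption: the $2,600 figure is a typical (median) loss, not a mean.
typical_loss_usd = 2_600

# Mean loss per report: total losses divided by the number of reports.
mean_loss_usd = total_losses_usd / reported_victims

print(f"Mean loss per report:    ${mean_loss_usd:,.0f}")
print(f"Typical loss per report: ${typical_loss_usd:,}")
print(f"Ratio of mean to typical: {mean_loss_usd / typical_loss_usd:.1f}x")
```

A gap of this size between the two values is what one would expect if most victims lose a few thousand dollars while a minority lose very large sums.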

How does a deepfake crypto scam work?

Similar to various fraudulent online activities, crypto scams involve deceitful schemes designed to mislead individuals or entities, often disseminating false information to steal money, personal information or other crucial data.

What sets a crypto scam apart is its focus on cryptocurrencies and the attempt to seize them. According to the FTC, Bitcoin is by far the most common target (70% of cases), while other cryptocurrencies such as Tether and Ether are less attractive to scammers, at least at the moment.

The tactics employed are diverse, often varying with the technology used by fraudsters or the type of trap set to deceive the public. In a vast number of cases, the scheme involves a fake video or message asking the victims to click on a link, leading them to perform actions that ultimately grant the fraudster access to their digital wallet or sensitive information (such as passwords and codes). In other cases, the scheme is much simpler, with the victims transferring their cryptocurrency directly to the scammer, mistakenly believing they are making a legitimate investment.

The explosive combination of generative AI and trading scams

One of the most pervasive types of crypto scam is the so-called “investment scam”, where a fraudster contacts you with a message promising a great investment opportunity, provided that you follow their instructions.

In this context, the use of AI technologies has emerged as the most dangerous method by far, equipping scammers with a toolkit of immense potential. As happened with Michael J. Saylor, with the help of inexpensive and perfectly legal AI avatar generation software, scammers can now perform “impersonation scams” and create AI-generated videos featuring virtually anyone they choose, including influencers or finance gurus. All they need to do is train the algorithm by providing it with original images and videos of the person they want to replicate, and the AI will be able to reproduce a near-identical avatar clone. In a few minutes, fraudsters can manufacture a deepfake video that can mislead thousands of people.

Even though some of these videos are of low quality and can be spotted at first glance, for example because of irregular outlines or the avatar’s unnatural facial expressions, others are virtually impossible to detect without deepfake detection software.

Romance scams: old playbook, new tricks

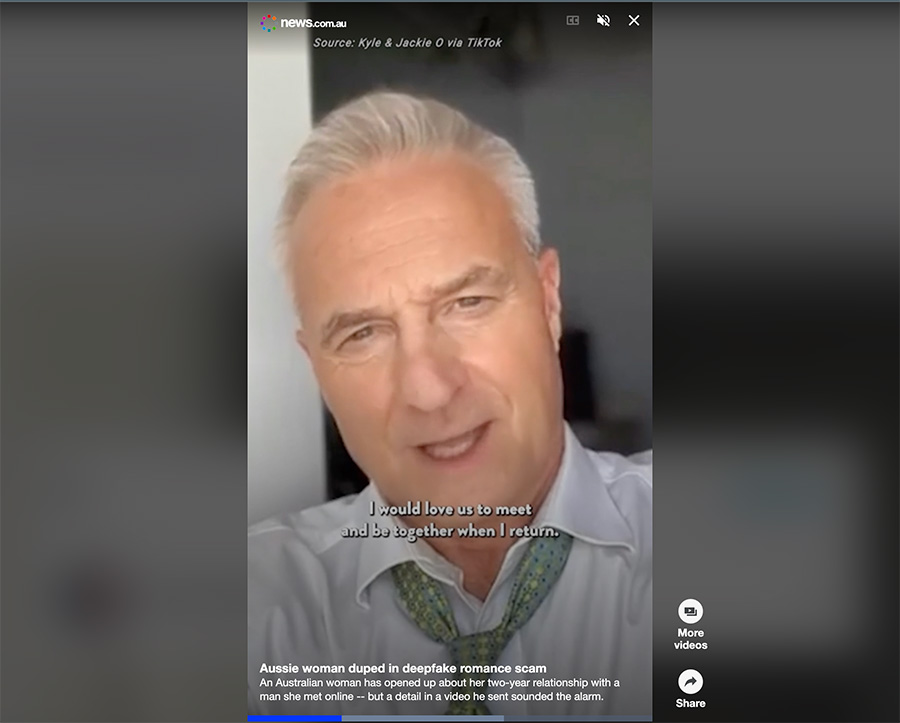

Romance scams are also among the most common crypto scams: fraudsters deceive victims into a fictitious romantic relationship, exploiting them emotionally for money. This type of crime employs heinous manipulative techniques that can cause not only financial but also psychological harm to the victim, who may be hesitant to report the scam for fear of social stigma.

Romance scams began to surge with the advent of social media and experienced significant growth in the era of dating apps such as Tinder and Bumble, in which scammers can easily create fake profiles, stealing both data and money. According to the FTC, reported romance scams accounted for losses of $1.3 billion in 2022 alone.

Now, however, deepfakes and AI have taken the game to a whole new level, expanding upon the old playbook. In the past, romance scammers faced difficulty hiding behind a profile picture for too long without raising suspicion. With AI, they can now create entirely new false personas, engaging in video calls or participating in live video chats using deepfake avatars or voice synthesis to communicate with their victims. Even simple written chats have become much more perilous, as AI algorithms can adopt a style designed to forge an emotional connection.

Deepfake detection for law enforcement

In light of the scenario we just outlined, deepfake romance and crypto scams present a significant challenge to law enforcement. Combating these cybercrimes requires substantial investment in resources, both human and material, and an ongoing effort to keep pace with the ever-evolving technological tactics employed by crypto scammers.

In the battle against these threats, detection software can be a pivotal tool for identifying manipulated content, equipping investigators to address the risks associated with AI and deepfakes.

The influence of these new tools can be compared to the transformative impact of forensic DNA analysis, which played an essential role in advancing investigative capabilities. Just as DNA analysis revolutionized the resolution of many “physical” crimes, deepfake detection has the potential to reshape the fight against digital crimes, providing law enforcement with a powerful instrument that can save a significant amount of time and resources.