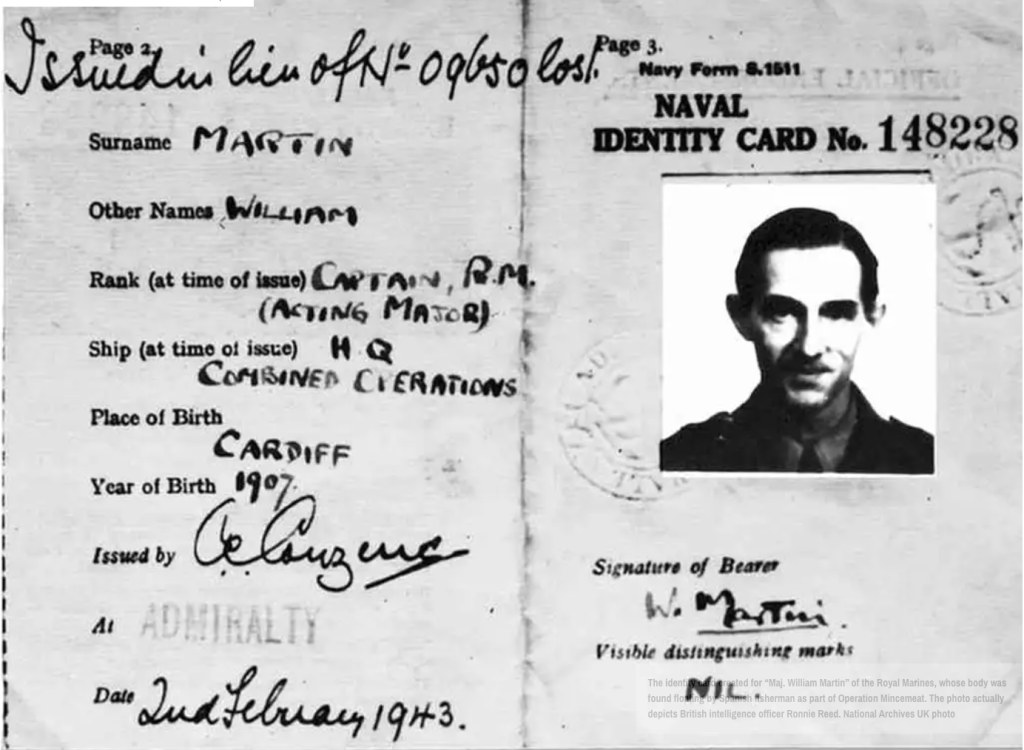

“Operation Mincemeat,” a masterful British deception during World War II, stands as one of history’s most intriguing examples of strategic subterfuge. In this operation, British intelligence cleverly duped Nazi Germany by planting false information on a corpse disguised as a British officer, which was then allowed to fall into enemy hands. This ruse significantly influenced the course of the war by diverting German forces away from the actual Allied invasion point (Sicily).

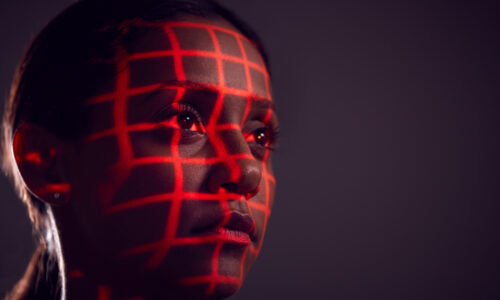

This is a fascinating precursor to the contemporary challenges posed by artificial intelligence (AI) in conflict scenarios. Just as “Operation Mincemeat” exploited the enemy’s trust in seemingly authentic documents, modern AI technologies like deepfakes and generative AI are reshaping the landscape of trust in intelligence and counterintelligence operations. In the context of ongoing conflicts worldwide, the ability to create convincing false information can have profound implications.

AI’s potential to fabricate realistic images, videos, and audio recordings introduces a new era of strategic deception, where discerning truth from falsehood becomes increasingly complex. This shift calls for a reevaluation of how trust and authenticity are established in wartime intelligence, underscoring the need for advanced verification methods and heightened skepticism in the digital age.

The ability to shape the narrative of a conflict, influencing both the enemy and public opinion, has always been crucial to securing a decisive victory. Often, in the effort to win hearts and minds, the truth becomes entangled, weaponized or distorted.

The manipulation of information can take many forms, all falling under the umbrella term of “information warfare”. In today’s digital era, this phenomenon has found a new battlefield: the internet. The advent of technologies such as Generative AI poses an unprecedented threat to the credibility of media outlets. It fuels the spread of disinformation and misinformation, which misleads not only ordinary users but also intelligence analysts.


Defining the precise boundaries of information warfare is not easy. Broadly, it includes any activity aimed at manipulating information to serve the manipulator’s interests, strategically weaponizing news and data to distort reality and shape news coverage.

The impact of these tactics has been evident in several significant events. These range from the 2016 and 2020 US presidential elections, in which Russian propaganda played a crucial role, to the recent conflicts in Ukraine and Israel, which have flooded social media platforms such as Facebook, Instagram, Telegram, WhatsApp, X and TikTok with misleading information. Information warfare also involves a virtual “clash” of opposing viewpoints that deploy conflicting narratives. Each side tries to win the argument by swaying as many people as possible.

Deepfakes and Generative AI: the arsenal of information warfare

The internet is packed with convincing deepfakes that most people find hard to spot. One of the first large-scale studies on the subject, published in the journal Science in 2018, found that false news on Twitter spreads faster and reaches a larger audience than truthful news.

Media manipulation often involves malicious alterations of audio or visual content that mimic credible sources in order to deceive users.

“Manufacturing” deepfakes can involve many kinds of technology, ranging from simple, inexpensive methods to highly sophisticated ones. However, one doesn’t need to be a tech-savvy user to create this type of content. Just consider the multitude of manipulated photos and videos of public figures, including celebrities, influencers and powerful politicians. These are easily crafted with tools like Photoshop or Canva, or with free image generators such as Midjourney, DALL-E and Stable Diffusion. Even a basic chatbot such as ChatGPT can effortlessly churn out large volumes of fake news. That material can spread quickly across the web and cause substantial harm with minimal effort. As AI technologies progress, the arsenal available to “information warriors” continues to expand rapidly.

Media manipulation on the battleground with deepfakes and generative AI

The impact of deepfakes and similar information-warfare deception techniques on news media was systematically examined for the first time in a 2022 study. The study was conducted by University College Cork (UCC) and published in PLOS ONE.

The research focused on the Russia-Ukraine war and analyzed over 5,000 tweets on X (formerly Twitter) to observe how users reacted to various types of deepfake content. This content ranged from AI-generated videos of Russian president Vladimir Putin or Ukrainian president Volodymyr Zelensky giving fabricated speeches to various pieces of edited footage from the conflict. The researchers found a real lack of “deepfake literacy” online. In other words, the public was unable to distinguish many of the deepfakes from legitimate news stories. Legitimate news was also often labeled as misinformation, which reinforced biases and conspiratorial thinking.

Cybercriminals may also exploit the images of celebrities or the credibility of established news sources to promote online scams. In the UK, for example, scammers used AI to promote a fraudulent trading app that was falsely presented as sponsored by Elon Musk. To persuade users, they used an AI-generated video of a BBC journalist delivering a positive report on the fake endorsement.

Who are the targets?

Think about every controversial event that has unfolded in the past three or four years: COVID-19, conflicts in Europe and the Middle East, elections, and scandals around the world. Each of these subjects has attracted numerous attempts at news manipulation across the web.

Social media platforms serve as ideal battlegrounds. They attract diverse demographics and serve each group tailored content based on its preferences and search history. In this environment, all users are potential victims of media manipulation.

However, information warfare tactics often target specific vulnerable groups. These include people with lower levels of education or income, older people, and those predisposed to a particular narrative because of their views on a specific subject.

Deepfake Detection: challenging but not impossible

Amid relentless information wars, falling into the trap of AI-generated images and fake news is easier than one might think. This is true even for people who aren’t typically considered vulnerable users. Take journalists, for instance. Because of the nature of their job, they are expected to spot stories before the general public does. This means they must identify potential fake news quickly, often under tight deadlines.

Even with the best intentions, they often fail, because deepfakes are not always immediately recognizable. In fact, most of this manipulated material, even lower-quality content, proves exceptionally difficult to detect.

New threats, new solutions

As AI technology rapidly advances and specific legislation targeting this phenomenon remains absent (at least for now), the need for deepfake detection has never been more urgent.

Without dedicated detection software, identifying deepfakes will soon become nearly impossible. Yet there is also a silver lining: the same AI techniques used to create false information can be harnessed to detect AI-manipulated identities, images, audio and videos. Developing these detection methods is the next significant challenge for many stakeholders, including news organizations, institutions and everyday users.

Their effectiveness will depend on user-friendly apps tailored to the specific needs of different categories of users. The next battle against media manipulation has only just begun.